
System crash when using more than 5 CPUs

- jpacalon

Topic Author

I'm quite new to the Martini world (and to GROMACS too), so please bear with my beginner questions.

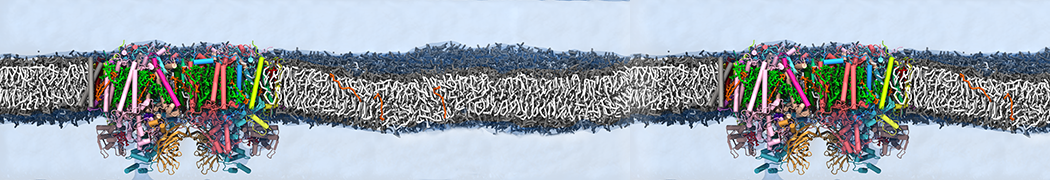

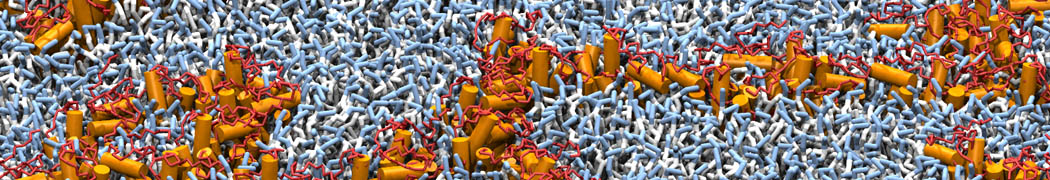

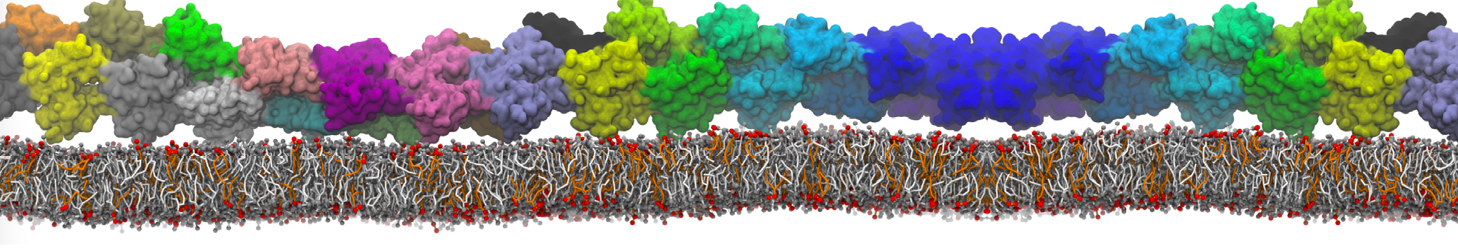

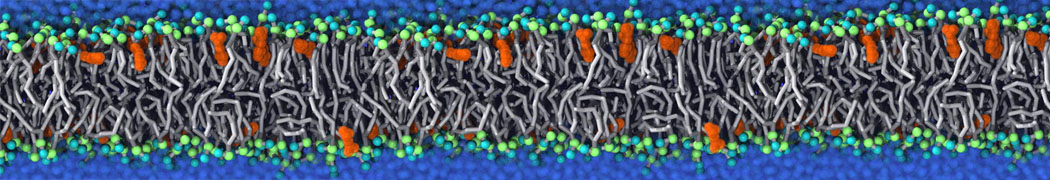

I'm trying to simulate a Martini 3 CG model of a holo homotetrameric channel in its active form, without its ligands, to see whether I can observe deactivation of the channel (using GROMACS 2022 gmx_mpi and OpenMPI 4.1.2). The idea is to compare three setups (without bias, with an elastic network (EN), and with a Go-like model) to see how the approaches differ, merging the tutorials "3: Proteins - Part I: Basic and Martinize 2" and "7: Proteins Part II – TM proteins and Protein complexes" of the 2021 Martini online workshop.

Starting with the simplest model, without bias, I cannot get a stable system if I run on more than 5 CPUs at a time. At some point it crashes with this error message:

Step 221950:

Atom 2620 moved more than the distance allowed by the domain decomposition (1.914000) in direction Z

distance out of cell -10.677090

New coordinates: 9.209 8.167 -10.677

Old cell boundaries in direction Z: 0.000 5.849

New cell boundaries in direction Z: 0.000 5.883

I tried to equilibrate very carefully, increasing the time step progressively from 5 to 10 to 20 fs, with 100 ns of simulation at each time step. I tried the Berendsen thermostat and barostat, hoping the system would equilibrate better, and I added an NVT step to the equilibration. The result is the same: it crashes whenever I put 10 CPUs or more on the task. I currently have a system that has been running on 5 CPUs for 50,000,000 steps without crashing, but with this load imbalance message:

imb F 27% step 49389700, will finish Wed Apr 13 20:39:20 2022
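For reference, the staged equilibration described above (100 ns each at 5, 10 and 20 fs) translates into the following step counts. This is only a minimal sketch of the arithmetic; the function name `nsteps_for` is mine and is not part of GROMACS.

```python
# Stage length and time steps taken from the description above.
STAGE_NS = 100               # each equilibration stage lasts 100 ns
TIMESTEPS_FS = [5, 10, 20]   # time step raised progressively


def nsteps_for(stage_ns, dt_fs):
    """Number of MD steps needed to cover stage_ns at a time step of dt_fs."""
    return stage_ns * 1_000_000 // dt_fs  # 1 ns = 1,000,000 fs


for dt_fs in TIMESTEPS_FS:
    # The .mdp file expects dt in ps, hence the division by 1000.
    print(f"dt = {dt_fs / 1000:.3f}  nsteps = {nsteps_for(STAGE_NS, dt_fs)}")
```

Each printed pair corresponds to the `dt` and `nsteps` values for one equilibration stage.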

Any tips on what I'm doing wrong would be much appreciated.

Below is an extract of my prod.log file, with details on the build and the server I'm using, in case it helps.

GROMACS version: 2022

Precision: mixed

Memory model: 64 bit

MPI library: MPI (CUDA-aware)

OpenMP support: enabled (GMX_OPENMP_MAX_THREADS = 128)

GPU support: CUDA

SIMD instructions: AVX_512

CPU FFT library: fftw-3.3.8-sse2-avx-avx2-avx2_128-avx512

GPU FFT library: cuFFT

RDTSCP usage: enabled

TNG support: enabled

Hwloc support: disabled

Tracing support: disabled

...

Running on 1 node with total 5 cores, 5 processing units (GPU detection failed)

Hardware detected on host compute06 (the node of MPI rank 0):

CPU info:

Vendor: Intel

Brand: Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz

Family: 6 Model: 85 Stepping: 7

Features: aes apic avx avx2 avx512f avx512cd avx512bw avx512vl avx512secondFMA clfsh cmov cx8 cx16 f16c fma hle htt intel lahf mmx msr nonstop_tsc pcid pclmuldq pdcm pdpe1gb popcnt pse rdrnd rdtscp rtm sse2 sse3 sse4.1 sse4.2 ssse3 tdt x2apic

Number of AVX-512 FMA units: 2

Hardware topology: Basic

Packages, cores, and logical processors:

[indices refer to OS logical processors]

Package 0: [ 0] [ 2] [ 4] [ 6] [ 8]

CPU limit set by OS: -1 Recommended max number of threads: 5

My prod.mdp for the system still running on 5 CPUs:

integrator = md

dt = 0.02

nsteps = 250000000 ; for 5 µs

nstxout = 0

nstvout = 0

nstfout = 0

nstlog = 1000

nstxout-compressed = 1000

compressed-x-precision = 100

cutoff-scheme = Verlet

nstlist = 20

ns_type = grid

pbc = xyz

verlet-buffer-tolerance = 0.005

coulombtype = reaction-field

rcoulomb = 1.1

epsilon_r = 15 ; 2.5 (with polarizable water)

epsilon_rf = 0

vdw_type = cutoff

vdw-modifier = Potential-shift-verlet

rvdw = 1.1

tcoupl = v-rescale

tc-grps = Protein_POPC W_ION

tau-t = 1.0 1.0

ref-t = 300 300

continuation = yes

gen-vel = no

Pcoupl = parrinello-rahman

Pcoupltype = semiisotropic

tau-p = 12.0

compressibility = 3e-4 3e-4

ref-p = 1.0 1.0
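As a quick check on the run length implied by these settings, here is a minimal sketch of the arithmetic. The values are copied from the .mdp above and from the step count reported earlier; the helper `simulated_us` is a hypothetical name used only for illustration.

```python
# Values copied from prod.mdp (dt = 0.02 ps is written here as 20 fs).
DT_FS = 20
NSTEPS = 250_000_000
NSTXOUT_COMPRESSED = 1000
STEPS_DONE = 50_000_000  # steps completed so far on 5 CPUs


def simulated_us(steps, dt_fs):
    """Simulated time in microseconds for a given number of steps."""
    return steps * dt_fs / 1e9  # 1 µs = 1e9 fs


print(f"total run:      {simulated_us(NSTEPS, DT_FS):.1f} µs")
print(f"completed:      {simulated_us(STEPS_DONE, DT_FS):.1f} µs")
# One compressed frame is written every NSTXOUT_COMPRESSED steps.
print(f"frame interval: {NSTXOUT_COMPRESSED * DT_FS / 1000:.0f} ps")
print(f"frames written: {NSTEPS // NSTXOUT_COMPRESSED}")
```

This confirms the "for 5 µs" comment next to `nsteps` and shows that the run still going on 5 CPUs has covered 1 µs so far.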

Bests,

Jody
